Benchmarking TLS, TLSnappy and NGINX

TL;DR

I've created the TLSnappy module, which aims to be faster than the built-in TLS module in node.js. So far it is slower on some benchmarks, but it will definitely get much snappier soon.

Preface

Many people complained about TLS performance in node.js, which, they said, was significantly worse than in other popular web servers, load balancers and TLS terminators (e.g. nginx, haproxy).

Several things were done to address this issue, including:

- Disabling OpenSSL compression in node (see Paul Querna's article and the corresponding Node.js commit)

- Bundling a newer version of OpenSSL

- Enabling inlined assembly

- Using a slab allocator to reduce memory allocation overhead

After all of these changes landed, the rps (requests per second) rate improved significantly, but many users were still unhappy with overall TLS performance.

TLSnappy

This time, instead of patching and tweaking tls, I decided it might be worth rewriting it from scratch as a third-party node.js addon. This recently became possible thanks to Nathan Rajlich and his awesome native addon build tool, node-gyp.

I didn't want to offer a module that is merely a functional equivalent of TLS; I also wanted to fix some issues (as I perceived them) and improve a few things:

- Encryption and decryption should happen asynchronously (i.e. in another thread). This could speed up the initial SSL handshake and let the event loop perform more operations while encryption/decryption happens in the background.

- The built-in TLS module passes, slices and copies buffers in JavaScript; all binary data operations should happen in C++ instead.

All of this was implemented in the TLSnappy module.
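The first design goal (do the crypto work off the event loop) can be illustrated with a minimal sketch. This is a Python asyncio analogy under stated assumptions, not tlsnappy's C++ code: `fake_encrypt`, `heartbeat` and `encrypt_all` are hypothetical names, and repeated SHA-256 merely stands in for real cipher work because Python's standard library has no symmetric cipher. CPU-heavy jobs are handed to a thread pool, and a heartbeat task counts how often the event loop stays free to run other work while those jobs are in flight.

```python
import asyncio
import hashlib
from concurrent.futures import ThreadPoolExecutor


def fake_encrypt(record: bytes) -> bytes:
    """Hypothetical stand-in for CPU-heavy cipher work on one record.

    Repeated SHA-256 is used only to burn CPU; hashlib releases the GIL
    for large inputs, so several worker threads can hash at the same time.
    """
    digest = b""
    for _ in range(50):
        digest = hashlib.sha256(digest + record).digest()
    return digest


async def heartbeat(ticks: list, stop: asyncio.Event) -> None:
    """Records one tick each time the event loop gets to run this task."""
    while not stop.is_set():
        ticks.append(1)
        await asyncio.sleep(0.001)


async def encrypt_all(records: list, workers: int = 4):
    loop = asyncio.get_running_loop()
    ticks: list = []
    stop = asyncio.Event()
    beat = asyncio.create_task(heartbeat(ticks, stop))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Hand every record to the pool; the loop itself only awaits results.
        jobs = [loop.run_in_executor(pool, fake_encrypt, r) for r in records]
        results = await asyncio.gather(*jobs)
    stop.set()
    await beat
    return results, len(ticks)


if __name__ == "__main__":
    data = [bytes([i]) * 65536 for i in range(8)]
    encrypted, ticks = asyncio.run(encrypt_all(data))
    print(f"{len(encrypted)} records processed; event loop ticked {ticks} times")
```

The pool size plays the role of tlsnappy's thread count; the point of the design is that the loop keeps servicing other tasks (here, the heartbeat) instead of blocking inside the crypto call.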

There were a lot of availability and stability issues (and surely many more that I'm not yet aware of), but tlsnappy seems to be quite a bit more performant than the built-in tls module, especially when you take into account that tlsnappy uses all available cores to encrypt/decrypt requests by default, while the tls module needs to be run in a cluster to balance the load between all cores.

Benchmarking

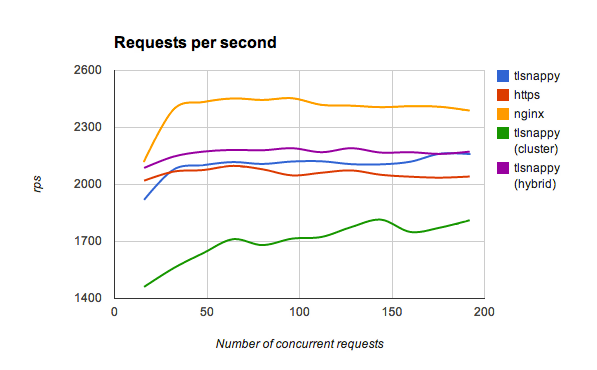

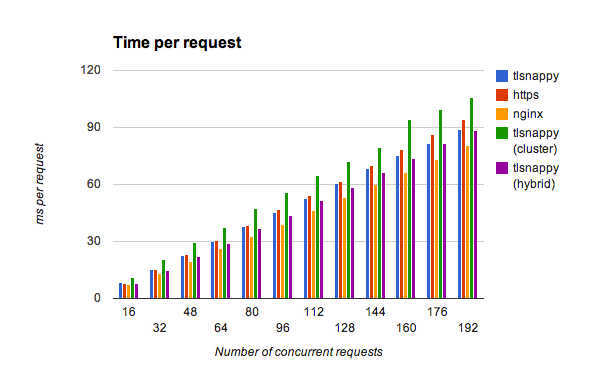

I confirmed this when benchmarking it with Apache Benchmark (ab) on my MacBook Pro and on a dedicated Xeon server. Here are the results from the latter:

A quick note on the curve names:

- default: one tlsnappy process with 16 threads

- hybrid: 4 tlsnappy processes with 4 threads each

- cluster: 16 tlsnappy processes with 1 thread each

- http: 16 node.js processes in a cluster
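The curve layouts can be written down as data to make their relationship explicit. A small sketch; the `Layout` class is hypothetical, and the single thread per node.js process in the `http` layout is an assumption (the post only says "16 node.js processes"):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """One benchmark curve: how the work is split across processes/threads."""
    name: str
    processes: int
    threads_per_process: int

    @property
    def workers(self) -> int:
        # Total number of parallel workers the layout can keep busy.
        return self.processes * self.threads_per_process


LAYOUTS = [
    Layout("default", processes=1, threads_per_process=16),
    Layout("hybrid", processes=4, threads_per_process=4),
    Layout("cluster", processes=16, threads_per_process=1),
    # Assumption: each node.js process does its TLS work on a single thread.
    Layout("http", processes=16, threads_per_process=1),
]

if __name__ == "__main__":
    for layout in LAYOUTS:
        print(f"{layout.name:8} {layout.processes:2} x "
              f"{layout.threads_per_process:2} = {layout.workers}")
```

Every layout adds up to 16 workers, so the curves compare how the same amount of parallelism is split between processes and threads rather than how much parallelism is available.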

As you can see, tlsnappy is faster than the tls server in almost every case, except in cluster mode (which just wasn't saturating the CPU enough). Everything looked great and shiny until Matt Ranney pointed out that ab results for https benchmarks are not really trustworthy:

@ryah @indutny I was also mislead by "ab" with https benchmarks. I'm not sure what tool to use instead though.

— Matt Ranney (@mranney) September 29, 2012

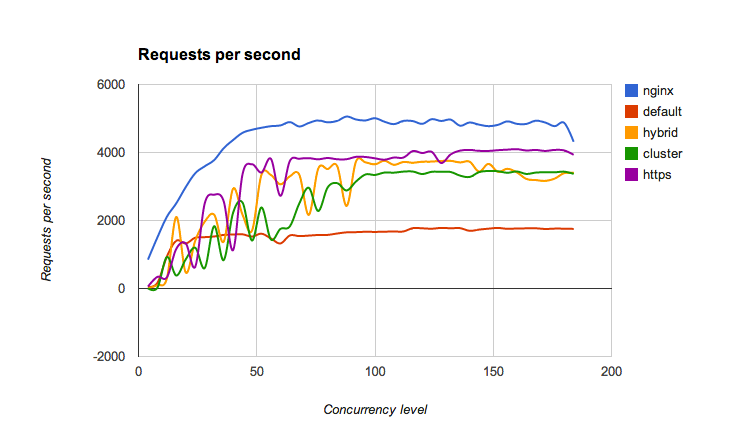

I installed siege, created a node.js script and let it run for some time:

The results are much better now (nginx was doing 5000 rps with siege versus 2500 rps with ab), but tlsnappy now seems to be slower than node.js's default tls server.

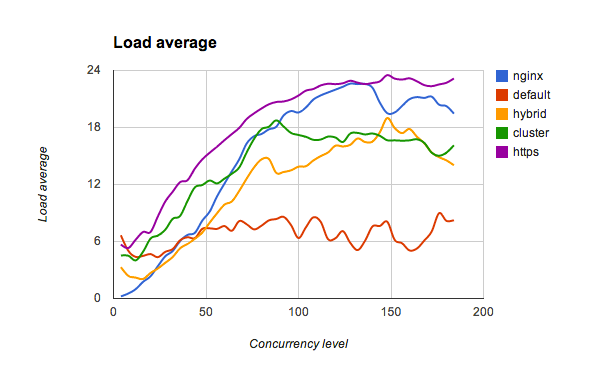

I started investigating and decided to track not only the rps rate but the CPU load too:
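The post doesn't say which tool recorded the CPU load. One common approach, sketched here under the assumption of a Linux host exposing `/proc/stat`, is to take two snapshots and compute per-CPU utilization as 1 - Δidle/Δtotal. The function names are hypothetical; idle time includes `iowait`, and only the first eight time columns are summed because the guest columns are already counted in `user`/`nice`.

```python
import time


def parse_proc_stat(text: str) -> dict:
    """Return {cpu_name: (idle_ticks, total_ticks)} for every 'cpu*' line."""
    result = {}
    for line in text.splitlines():
        fields = line.split()
        if not fields or not fields[0].startswith("cpu"):
            continue  # skip intr, ctxt, btime, ...
        values = [int(v) for v in fields[1:]]
        # Columns: user nice system idle iowait irq softirq steal [guest ...]
        idle = values[3] + (values[4] if len(values) > 4 else 0)
        total = sum(values[:8])
        result[fields[0]] = (idle, total)
    return result


def utilization(before: str, after: str) -> dict:
    """Fraction of time each CPU was busy between two /proc/stat snapshots."""
    first, second = parse_proc_stat(before), parse_proc_stat(after)
    usage = {}
    for cpu, (idle1, total1) in first.items():
        idle2, total2 = second[cpu]
        d_total = total2 - total1
        usage[cpu] = 0.0 if d_total <= 0 else 1.0 - (idle2 - idle1) / d_total
    return usage


def sample(interval: float = 1.0) -> dict:
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = f.read()
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = f.read()
    return utilization(before, after)
```

Looking at the per-core entries (`cpu0`, `cpu1`, ...) rather than only the aggregate `cpu` line is what shows whether a server keeps every core busy or leaves some of them partly idle.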

Afterword

Right now, as the chart above shows, tlsnappy doesn't saturate all CPUs well. I suspect this is a major reason for its relative slowness compared to both nginx and the https module. I'm working on making it balance and handle requests better, and will sum up the results of this investigation in the next blog post.

For those of you who are interested in more details, here is the benchmark data.
